| HA |

TAB |

SIG |

DEZ |

- Right hand high

- Left hand high

- Hands are side by side

- Hands are in contact

- Hands are crossed

|

- The neutral space

- Face

- Left side of face

- right side of face

- chain

- R Shoulder

- L Shoulder

- Chest

- Stomach

- Right Hip

- Left Hip

- Right Elbow

- Left Elbow

|

- Hand Makes no movement

- Hand moves up

- Hand movesdown

- Hand moves left

- Hand moves right

- Hands move apart

- Hands move together

- Hands move in unison

|

- 5

- A

- B

- C

- F

- G

- H

- I

- P

- V

- W

- Y

|

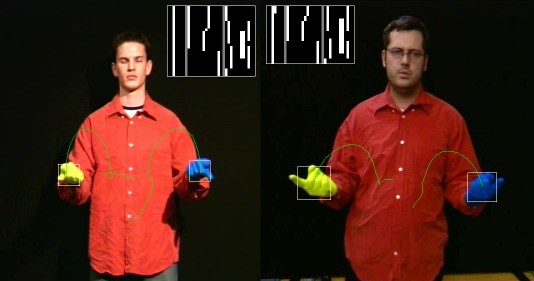

The

figure shows the features generated by the system over time. The horizontal binary vector

shows HA, SIG, TAB and DEZ in that order delineated by grey bands. The consistency in

features produced can clearly be seen between examples of the same word. It is also

possible to decode the vectors back into a textual description of the sign in the same way

one would with a dictionary. The feature vector naturally generalises the motion without

loss in descriptive ability. The figure shows the word ‘different’ being

performed by two different people along with the binary feature vector produced. The

similarity is clear, and the signed words are correctly classified. Linguistic evidence points to the fact that sign recognition is

primarily performed upon the dominant hand (which conveys the majority of information) we

therefore currently discard the non dominant hand and concatenate HA, TAB, SIG and DEZ

features together to produce a 40 dimensional binary vector which describes the shape and

motion in a single frame of video.

The

figure shows the features generated by the system over time. The horizontal binary vector

shows HA, SIG, TAB and DEZ in that order delineated by grey bands. The consistency in

features produced can clearly be seen between examples of the same word. It is also

possible to decode the vectors back into a textual description of the sign in the same way

one would with a dictionary. The feature vector naturally generalises the motion without

loss in descriptive ability. The figure shows the word ‘different’ being

performed by two different people along with the binary feature vector produced. The

similarity is clear, and the signed words are correctly classified. Linguistic evidence points to the fact that sign recognition is

primarily performed upon the dominant hand (which conveys the majority of information) we

therefore currently discard the non dominant hand and concatenate HA, TAB, SIG and DEZ

features together to produce a 40 dimensional binary vector which describes the shape and

motion in a single frame of video.

Classification stage II: Each sign is

modelled as a 1st order Markov chain in which each state in the chain represents a

particular set of feature vectors (denoted symbols below) from the stage I classification.

The Markov chain encodes temporal transitions of the signer's hands. During

classification, the chain which produces the highest probability of describing the

observation sequence is deemed to be the recognised word. In the training stage, these

Markov chains may be learnt from a single training example.

Robust Symbol Selection: An appropriate mapping from stage I

feature vectors to symbols (representing the states in the Markov chains) must be

selected. If signs were produced by signers without any variability, or if the stage I

classification was perfect, then (aside from any computational concerns) one could simply

use a one-to-one mapping; that is, each unique feature vector that occurs in the course of

a sign is assigned a corresponding state in the chain. However, the HA/TAB/SIG/DEZ

representation we employ is binary and signers do not exhibit perfect repeatability. Minor

variations over sign instances appear as perturbations in the feature vector degrading

classification performance.

For example the BSL sign for `Television', `Computer' or

`Picture' all involve an iconic drawing of a square with both hands in front of the

signer. The hands move apart (for the top of the square) and then down (for the side) etc.

Ideally, a HMM could be learnt to represent the appropriate sequence of HA/TAB/SIG/DEZ

representations for these motions. However the exact position/size of the square and

velocity of the hands vary between individual signers as does the context in which they

are using the sign. This results in subtle variations in any feature vector however

successfully it attempts to generalise the motion.

To achieve an optimal feature-to-symbol mapping we apply

Independent Component Analysis (ICA). Termed feature selection, this takes advantage of

the separation of correlated features and noise in an ICA transformed space and removes

those dimensions that correspond to noise. More details are given in our papers.

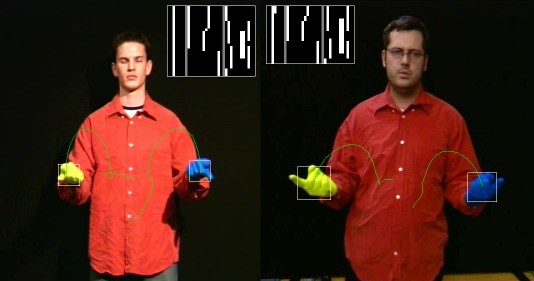

The

figure shows the features generated by the system over time. The horizontal binary vector

shows HA, SIG, TAB and DEZ in that order delineated by grey bands. The consistency in

features produced can clearly be seen between examples of the same word. It is also

possible to decode the vectors back into a textual description of the sign in the same way

one would with a dictionary. The feature vector naturally generalises the motion without

loss in descriptive ability. The figure shows the word ‘different’ being

performed by two different people along with the binary feature vector produced. The

similarity is clear, and the signed words are correctly classified. Linguistic evidence points to the fact that sign recognition is

primarily performed upon the dominant hand (which conveys the majority of information) we

therefore currently discard the non dominant hand and concatenate HA, TAB, SIG and DEZ

features together to produce a 40 dimensional binary vector which describes the shape and

motion in a single frame of video.

The

figure shows the features generated by the system over time. The horizontal binary vector

shows HA, SIG, TAB and DEZ in that order delineated by grey bands. The consistency in

features produced can clearly be seen between examples of the same word. It is also

possible to decode the vectors back into a textual description of the sign in the same way

one would with a dictionary. The feature vector naturally generalises the motion without

loss in descriptive ability. The figure shows the word ‘different’ being

performed by two different people along with the binary feature vector produced. The

similarity is clear, and the signed words are correctly classified. Linguistic evidence points to the fact that sign recognition is

primarily performed upon the dominant hand (which conveys the majority of information) we

therefore currently discard the non dominant hand and concatenate HA, TAB, SIG and DEZ

features together to produce a 40 dimensional binary vector which describes the shape and

motion in a single frame of video.